Going Viral

Neural Network-Generated Celebrity PSAs

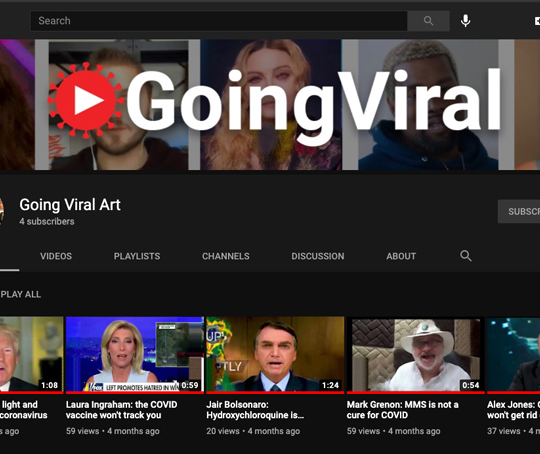

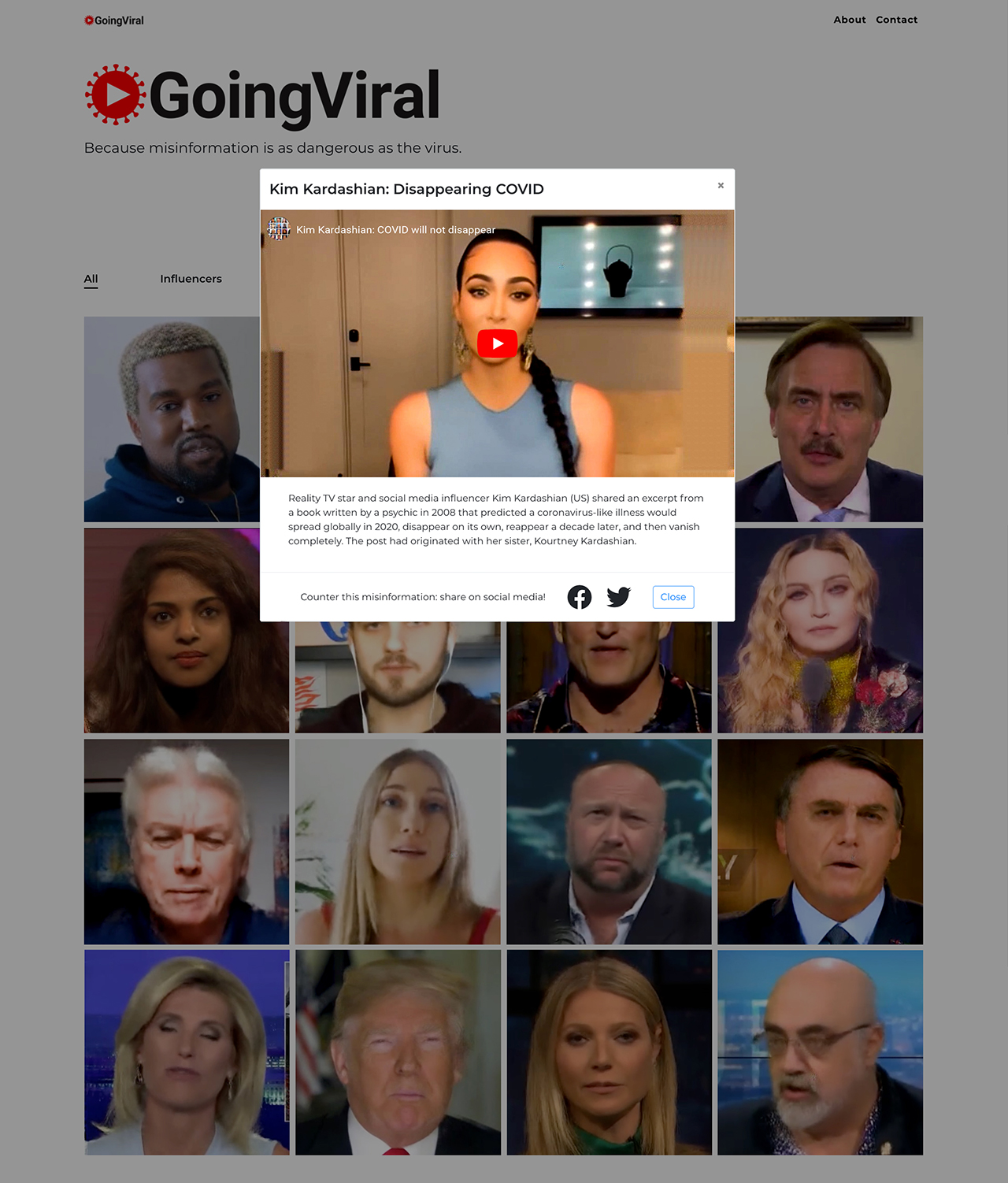

Going Viral is an interactive artwork that invites people to intervene in the spread of misinformation by sharing informational videos about COVID-19. The videos feature algorithmically generated celebrities, social media influencers, and politicians who have previously shared misinformation about the coronavirus. In the videos, the influencers deliver public service announcements or present news stories that counter the misinformation they have promoted on social media.

The sharable YouTube videos are made using a conditional generative adversarial network (cGAN) trained on pairs of images, in which one image serves as a map for producing the other, resulting in a glitchy reconstruction of the speaker. The recognizable but clearly digitally produced aesthetic prevents the videos from being classified as “deepfakes” and removed by online platforms, while inviting viewers to reflect on the constructed nature of celebrity and to question both the authority of celebrities on issues of public health and the validity of information shared on social media. Celebrities and social media influencers are now entangled in the discourse on public health, and are sometimes given more authority than scientists or public health officials. Like the rumors they spread, the online popularity of social media influencers and celebrities is amplified by the neural network-based content recommendation algorithms that online platforms use.

Process

The videos in Going Viral are made using a Pix2Pix conditional generative adversarial network (cGAN). In a cGAN, a neural network is trained on pairs of images, where the first image in each pair serves as a map for producing the second. In Going Viral, each pair consists of the facial landmarks detected in a video frame and the frame itself. Once the model is trained, it can generate an image of a face from facial landmarks alone.
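To make the paired-image idea concrete, here is a minimal sketch of how one training pair could be assembled. It assumes landmarks arrive as integer (x, y) pixel coordinates and treats images as plain nested lists of grayscale values. The function names `draw_landmark_map` and `make_training_pair` are hypothetical, and the side-by-side layout is one common convention in Pix2Pix implementations, not necessarily the one used for this artwork.

```python
from typing import List, Sequence, Tuple

# Simplification: a grayscale image as rows of 0-255 integers.
Image = List[List[int]]


def draw_landmark_map(
    landmarks: Sequence[Tuple[int, int]], width: int, height: int
) -> Image:
    """Rasterize (x, y) landmark points as white pixels on a black canvas."""
    canvas = [[0] * width for _ in range(height)]
    for x, y in landmarks:
        # Ignore points that fall outside the canvas.
        if 0 <= x < width and 0 <= y < height:
            canvas[y][x] = 255
    return canvas


def make_training_pair(landmark_map: Image, frame: Image) -> Image:
    """Join the map (left) and the target frame (right) into one image."""
    if len(landmark_map) != len(frame):
        raise ValueError("map and frame must have the same height")
    return [map_row + frame_row for map_row, frame_row in zip(landmark_map, frame)]
```

In a real pipeline the landmarks would come from a face landmark detector and the frames would be full-color images, but the pairing logic stays the same: the left half is the input map and the right half is the image the model learns to produce.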

The process starts by extracting the facial landmarks of an influencer, celebrity, or politician from frames of a video. A model that maps the landmarks to a specific image of the influencer is then trained. Next, we take video of an expert or journalist speaking on a topic the influencer has spread misinformation about and extract the facial landmarks from that expert. We then use the facial landmarks of the second speaker to generate video frames of the influencer, celebrity, or politician speaking the same words. Finally, the new frames are combined with the audio track of the expert or journalist to produce a new video in which the influencer corrects the misinformation they have spread.
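The generation stage described above can be sketched as a simple loop over the expert's frames. This is an illustration under stated assumptions, not the artwork's actual code: `reenact`, `extract_landmarks`, and `generator` are hypothetical names, the landmark detector and the trained model are passed in as plain callables, and every expert frame is assumed to contain a detectable face.

```python
from typing import Callable, Iterable, List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")
Landmarks = Sequence[Tuple[int, int]]


def reenact(
    expert_frames: Iterable[Frame],
    extract_landmarks: Callable[[Frame], Landmarks],
    generator: Callable[[Landmarks], Frame],
) -> List[Frame]:
    """Generate one influencer frame for each expert frame.

    extract_landmarks: hypothetical detector returning (x, y) points.
    generator: hypothetical trained model mapping landmarks to an image.
    """
    generated: List[Frame] = []
    for frame in expert_frames:
        landmarks = extract_landmarks(frame)   # landmarks of the expert
        generated.append(generator(landmarks)) # rendered as the influencer
    return generated
```

Because the loop emits exactly one output frame per input frame, the generated sequence keeps the same length and timing as the expert's video, which is what allows the expert's original audio track to be laid over it in the final step.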

Citations:

- Metrópolis TV, Feature on Piksel XX, “Going Viral”, February 15, 2023.

- Ana Hine, “Given to Chance,” NEoN Digital Arts, November 24, 2020.

- Dejan Grba, “Deep Else: A Critical Framework for AI Art,” Digital 2, no. 1: 1-32, 2022.

- “Going Viral,” Issues in Science and Technology Magazine, Coronavirus Pandemic Creative Responses Archive, Cultural Programs of the National Academy of Sciences (CPNAS).