Boogaloo Bias

Facial Recognition by Any Memes Necessary


Boogaloo Bias is an interactive artwork and research project that highlights some of the known problems with the unregulated use of facial recognition technologies.

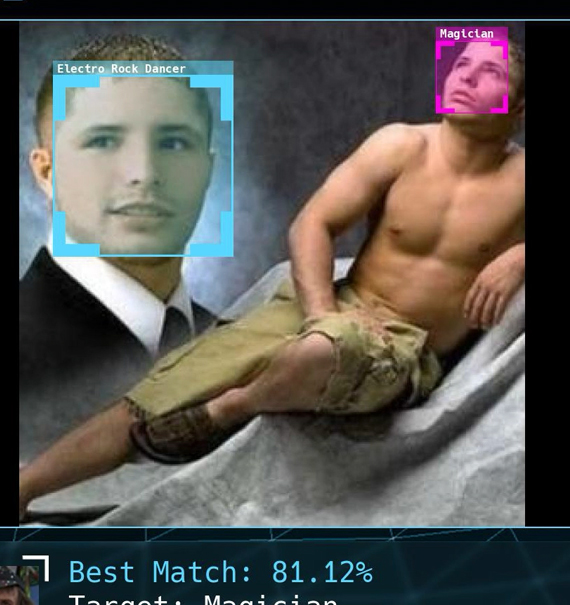

One problematic practice is ‘brute forcing’ facial recognition. This happens when there is no high-quality image of a suspect because the surveillance footage is too grainy, the camera is poorly positioned, or the lighting is too poor to capture a clear image of the suspect’s face. In these cases, law enforcement agencies have been known to substitute images of celebrities the suspect is reported to resemble. To lampoon this approach, the Boogaloo Bias facial recognition algorithm is trained only on faces of characters from the 1984 movie Breakin’ 2: Electric Boogaloo and is used to search for members of the anti-law-enforcement militia known as the Boogaloo Bois.

The film is the namesake of the Boogaloo Bois, who emerged from 4chan meme culture and have been present at both right- and left-wing protests in the US since January 2020. The system is used to search live video feeds, protest footage, and images uploaded to the Boogaloo Bias website. Because the system knows only the faces of fictional characters, every match it makes is a false positive. No information from the live feeds or website uploads is saved or shared.

Boogaloo Bias raises questions about automated decision-making, public accountability, and oversight within socio-technical systems where machines contribute to the decision-making process.

Facial recognition technology makes it possible to surveil hundreds of people simultaneously and to automate decisions using artificial intelligence, establishing a power structure controlled by a technocratic elite. Rather than offering a way to improve facial recognition, Boogaloo Bias pushes the logic behind current law enforcement uses of the technology to an extreme, highlighting the absurdity of how it is being developed and deployed. Some law enforcement agencies already use images of celebrity doppelgängers to find suspects. In Boogaloo Bias, the corpus of training images consists solely of fictional characters, so the system can produce only false positives.

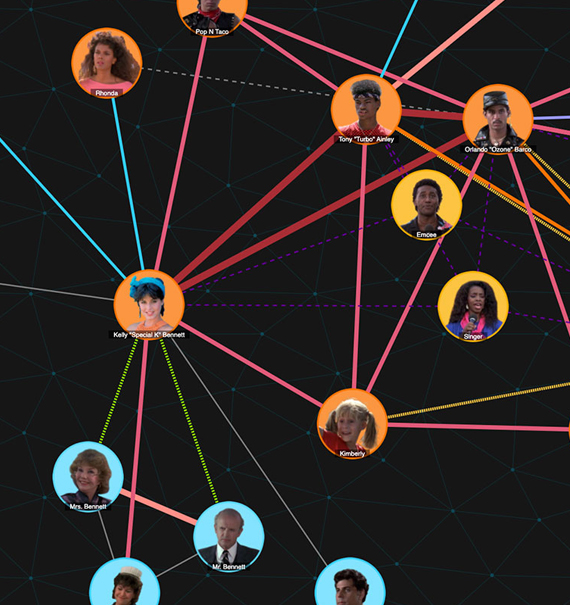

In an installation of the project, viewers can interact with the Boogaloo Bias facial recognition algorithm through one or more live CCTV cameras and see which Breakin’ 2 characters the system identifies as matches. As viewers move their heads or change their facial expressions, their matched character can change, revealing how easily this practice can be compromised. The installation can also feature interactive social network analysis (SNA) diagrams, additional protest footage from 2020 and 2021, and clips from the movie Breakin’ 2 that have been processed by our facial recognition system.

The interactive experience in Boogaloo Bias demonstrates how surveillance technology deployed without regulation or public oversight can produce absurdly erroneous results. The project draws on a number of academic and journalistic sources, including a study by the Georgetown Law Center on Privacy and Technology, which found that because there are “no rules when it comes to what images police can submit to face recognition algorithms to generate investigative leads,” agents have been known to submit not only low-quality CCTV images but also hand-drawn forensic sketches, proxy images generated from artist sketches, and images of celebrities thought to resemble a suspect (Angelyn, 2019).

The project also reveals problems that arise from using low confidence thresholds. While some tech companies have stressed that police should use confidence thresholds between 95% and 99%, law enforcement agencies often use the low, out-of-the-box default of 80% to maximize investigative leads (Wood, 2018; Levin, 2018). The Boogaloo Bias system returns every match and highlights those above the 80% out-of-the-box threshold, so participants can see how the choice of threshold affects which matches the system reports.
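To make the threshold effect concrete, here is a minimal Python sketch, assuming each candidate match carries a single confidence score between 0 and 1. The `Match` type, the `annotate_matches` and `count_flagged` functions, the labels, and the scores are all hypothetical illustrations, not the Boogaloo Bias code or its output. It mirrors the behavior described above: every match is returned, and only those at or above the threshold (0.80 by default) are flagged.

```python
from dataclasses import dataclass


# Hypothetical match record; field names are illustrative assumptions,
# not the Boogaloo Bias system's actual data model.
@dataclass
class Match:
    label: str         # gallery identity the probe face was compared against
    confidence: float  # similarity/confidence score in [0, 1]


def annotate_matches(matches, threshold=0.80):
    """Return every match, ranked by confidence, each paired with a flag.

    Nothing is filtered out: all candidates are kept and only the flag
    depends on the threshold. Treating the boundary as inclusive (>=)
    is an assumption.
    """
    ranked = sorted(matches, key=lambda m: m.confidence, reverse=True)
    return [(m, m.confidence >= threshold) for m in ranked]


def count_flagged(matches, threshold):
    """Count how many candidates would be highlighted at a given threshold."""
    return sum(flag for _, flag in annotate_matches(matches, threshold))


if __name__ == "__main__":
    # Made-up scores for illustration only. Every gallery identity is
    # fictional, so any flagged "match" is a false positive by construction.
    candidates = [
        Match("character_A", 0.83),
        Match("character_B", 0.91),
        Match("character_C", 0.62),
    ]
    for threshold in (0.80, 0.95, 0.99):
        print(f"threshold {threshold:.2f}: "
              f"{count_flagged(candidates, threshold)} flagged")
    for m, flagged in annotate_matches(candidates):
        marker = "*" if flagged else " "
        print(f"{marker} {m.label}: {m.confidence:.2f}")
```

Raising the threshold leaves the list of returned candidates unchanged but shrinks the set of highlighted matches: with these made-up scores, two candidates are flagged at 0.80 and none at 0.95 or 0.99.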

Press & citations:

- Framer Framed Podcast, “Digital Doppelgangers: Art and the Failure of Facial Recognition,” episode #19.

- Dejan Grba, “Computer Vision in Tactical AI Art,” Arts & Communication Journal, 2282.

- Dejan Grba, “Renegade X: Poetic Contingencies in Computational Art,” Proceedings of the 11th Conference on Computation, Communication, Aesthetics & X, 2023.

- Stacia Pelletier, “Boogaloo Bias by Jennifer Gradecki and Derek Curry,” Science Gallery Atlanta, September 12, 2023.

- Mrinmayee Bhoot, “Probing the (un)certainties of (un)knowing at Framer Framed’s Amsterdam exhibition,” StirWorld, August 16, 2024.

- Paula Rodríguez Sardiñas, “Ik ben, dus ik twijfel — ‘Really? Art and Knowledge in Time of Crisis’ bij Framer Framed” [I am, therefore I doubt: ‘Really? Art and Knowledge in Time of Crisis’ at Framer Framed], Metropolis M, May 9, 2024.

- Immerse, “A Glimpse Into the Burgeoning World of AI Arts and Media,” Medium, March 3, 2022.